Billings understands the fears people have but notes that God is sovereign and “we know how the end of the book is written.” And while “the concerns around artificial intelligence are legitimate,” the questions that AI raises are very similar to questions Christians faced at the dawn of the internet. The internet has contributed to the spread of the gospel in many ways, and Billings believes that AI has the potential to do the same on a significantly greater scale.

“AI is going to dramatically increase the spread of the gospel if we think about it within the right architectures,” he said. “It can also expose people to harm…And so the right guidelines and guardrails need to be put around things.”

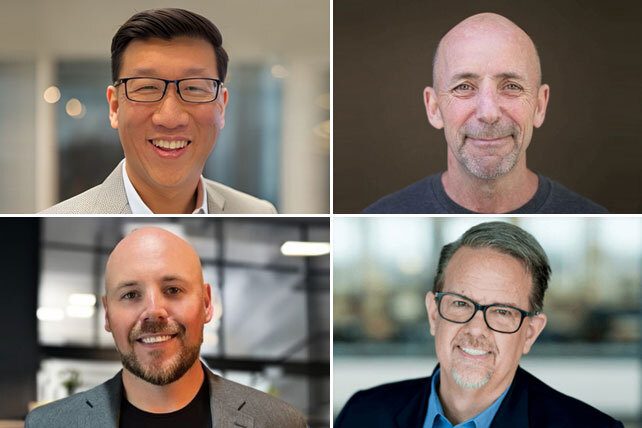

Jahng believes the impact of AI on society will be extraordinary. Compared to “the level of disruption of what the internet and websites have done—this is even larger than that in my mind,” he said. “The relevance that a church has is going to be so decimated if we don’t embrace AI at some point.”

There is a lesson Jahng believes we can learn from the development of social media. “I think society and culture, for the most part, has understood that social media and the evolution of social media platforms probably wasn’t net positive for all of us, right?” he said. “We know the algorithms are profit-motivated. It’s not for human flourishing. We look at that in hindsight now because it’s too late.”

“You saw late in the game there were entrepreneurial efforts to create Christian Facebooks,” Jahng said. “But in my mind, that was way too late, right? Facebook, Twitter, Instagram—They had mass audiences by that time.”

However, Jahng said, as the CEO of Microsoft has pointed out, “This innovation front is much earlier. The public has gotten hold of it much earlier than others.”

“For example, ChatGPT…has hallucinations,” he explained, meaning that AI at times presents false information. “It’s broken. It’s not perfect. Like, Apple would not release ChatGPT itself. Right? Because Apple needs to make everything perfect.”

“Those hard questions are being asked about AI much earlier in this technology innovation front than most others that have preceded it,” said Jahng, and that is why events like the AI and the Church Hackathon are so important.

The hackathon is an example of the Church coming together “to ask those questions very early on. How might we…influence the public, secular, large language models that are being built and used by corporations?” Jahng asked. “But also how might we develop alternatives that might play well with them or clear alternatives for faith-based Christian worldview audiences?”

One of the alternatives that Gloo announced at the hackathon is the Christian-Aligned Large Language Model (CALLM), “which is completely based on the principles of transparency and clarity,” Billings explained.

“ChatGPT is a closed environment…started by a company called OpenAI, but so much of their architecture is actually closed off,” he said. “You don’t have transparency to it. You don’t know what goes into it. You don’t understand the biases that are in it.”

For example, “When you ask a Christian question, you [wonder], what data related to Christianity do you [OpenAI] have that you’re going to use to answer my question? And then they’re not citing and sourcing.”

OpenAI, at least initially, “scraped content that was publicly accessible without the consent of the original creator of that content. They did not cite and source things correctly,” said Billings. “They did not provide enough clarity into how they were training their models and what biases maybe they were giving their models to use to respond to certain questions.”

Through a research initiative called Flourishing AI, Gloo has learned that people lack trust in large AI models. “Lower levels of trust will result in lower levels of adoption, and lower levels of adoption will result in lower levels of impact,” Billings said. “And so we see the first problem to solve is to increase trust.”

In contrast to some other models, CALLM will be open source, “which means,” said Billings, “that we will take the entire code base starting in 2025, and we’ll put it online, and anybody can download the code base, and they can see every line of code that they want. They can inspect it; they can use it to hold us accountable.”