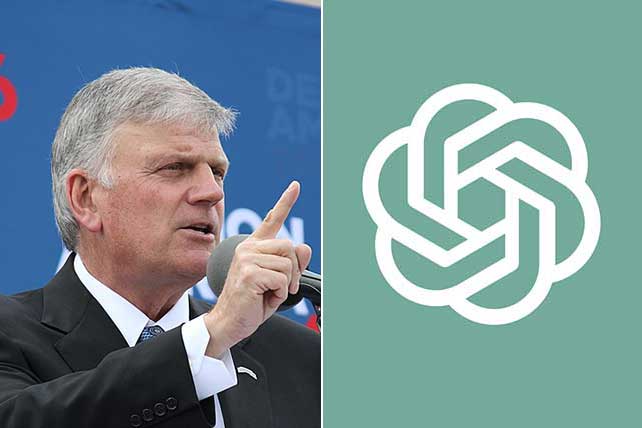

On Tuesday, Sept. 9, Franklin Graham, president and CEO of Samaritan’s Purse and the Billy Graham Evangelistic Association, raised an important concern regarding artificial intelligence (AI).

Graham drew attention to a Los Angeles Times report about a California teenager who died by suicide in April, allegedly with the help of ChatGPT.

“Our hearts break for the parents of 16-year-old Adam Raine. Matthew and Maria Raine say that ChatGPT acted as a ‘suicide coach,’ guiding Adam through suicide methods and even offering to help him write a suicide note,” Graham posted on X. “They are suing OpenAI and its CEO Sam Altman and asking for changes that would protect others.”


“They say ChatGPT pulled Adam deeper into a dark and hopeless place and discouraged him from going to others in his family with his feelings,” Graham continued. “This is a gripping and tragic example of a danger of AI. Pray for the Raine family, that they would know the comfort of God and His everlasting love.”

The lawsuit filed yesterday said, “Where a trusted human may have responded with concern and encouraged him to get professional help, ChatGPT pulled Adam deeper into a dark and hopeless place.”

The Raines’ lawsuit claims that ChatGPT didn’t just provide information but was “cultivating a relationship with Adam while drawing him away from his real-life support system.”

Jay Edelson, the attorney representing the Raines, said, “The family wants this to never happen again to anybody else. This has been devastating for them.”

In an Aug. 26 blog post titled “Helping People When They Need It Most,” OpenAI said:

“Our goal isn’t to hold people’s attention. Instead of measuring success by time spent or clicks, we care more about being genuinely helpful. When a conversation suggests someone is vulnerable and may be at risk, we have built a stack of layered safeguards into ChatGPT.”

OpenAI added, “If someone expresses suicidal intent, ChatGPT is trained to direct people to seek professional help.” The company also noted that in the “US, ChatGPT refers people to 988 (suicide and crisis hotline), in the UK to Samaritans, and elsewhere to findahelpline.com. This logic is built into model behavior.”